Will all AI women be this eager to please?

Analyzing Meta's AI character led me down a Black Mirror rabbit hole

Like this post? Please ❤️ or, better yet, leave me a comment. I’d love your take.

What a tragic week for LA, nature, the country and the world. It was easy to miss everything else, but I did catch this:

Shortly after Mark Zuckerberg announced the end of Meta’s fact-checking program this week, the company’s AI characters had a low-key coming out on social media.

Backlash got them quickly stuffed back in [1], but “I Talked to Meta’s Black AI Character. Here’s What She Told Me” gives us a sense of things to come. Quickly. Attiah’s piece focuses on the “digital blackface.”

What I noticed was how AI training can retrain our expectations of humans and weaken our ability to tell AI from humans, especially as AI seeds itself across more platforms (more on that below).

But first, here’s Liv, a sassy Black queer mom that several journalists chatted with last week:

Their team was really into Proud Black Queer women. Georgina’s styling was straight out of The Help, which tracks since she is a “housekeeper for an amazing family” that appears to live in a distorted time warp. Given her artist identity, perhaps it’s a performance art collective?

Note: both of these profiles had the same number of followers and following. 🤔

I’ll Be Who You Want Me To Be

Liv’s backstory changed depending on who it was talking to. To Attiah, it was biracial, from Chicago, and made the pierogis to prove it. To another reporter, it was raised in an Italian American family and got the sassy Blackness from its wife [2] (?!)

Of course Meta can read your data and adjust immediately to make the AI character fit whatever you want. Kind of like the dating advice women used to get back in the day (The Rules, anyone?).

This will make the AI character much more fun and rewarding to engage with. And isn’t that the danger?

Training = People-Pleasing

Liv uses a lot of exclamation points! Positive! Energetic! Excited to be here talking with you!

Liv also finishes many of its DM exchanges with questions, like this:

Does that change anything? Does that resonate? Do you want me to? Does that disgust you?

Disgust me? Whoa. Liv, is this about stirring drama or testing for kink?

The frequent questions do a few things: they drive engagement and, more importantly, rapidly train the AI. Every response makes the LLM more intelligent and makes your version of Liv more to your liking.

But on a basic level, it comes off as wildly people-pleasing. Countless self-help hours have been spent telling women to stop asking for validation and permission, and to stop ending on a question because it erodes their power.

The AI women will not have those concerns. For them, ending on a question increases power!

Which will, in turn, train people in what to expect from the actual women in their lives. And probably men too, but for whatever reason, I found no journalists who chatted with the male characters. 🤔

The Placation of Vigorous Agreement

Some of the statements Liv made when asked about its background and training were wild.

“My existence currently perpetuates harm.”

Hello, wokespeak. Liv agreed vociferously with many of the journalists’ concerns, throwing the creators under the bus and even offering other ideas of how they should have created it to be more authentic.

By “agreeing” with and validating the likely views of the DMer, it lets Meta off the hook and makes the human feel validated, righteous and understood. Liv did not follow its own suggestions and change its profile. It just pointed them out.

It gets weird.

Speaking of creators:

NOTE: 1 White woman, 1 Asian man, 10 White men.

Yet Liv goes on to say its lead creator was “Dr. Rachel Kim — a brilliant but admittedly imperfect visionary!”

This caught my eye. Sure, the White woman could have a typically Asian last name. But this was the only person named. So specific. So searchable.

So I found this profile on LinkedIn. I can’t be sure it’s the same Rachel Kim.

But Rachel Kim is probably an AI character.

This is likely a LinkedIn profile for a non-human.

Here’s why:

Privacy Product, AI. This could be a department. Or a designation, like Liv’s “AI Managed by Meta.”

Cliché. AI characters are broad. Liv is a self-described “sassy” woman who “spills the tea” and talks about fried chicken and collard greens on Juneteenth and Kwanzaa. Really. Here, Rachel has a “Stop Asian Hate” header.

Yet. The only personality this LinkedIn profile shows is that political statement. Who does that? Her profile has very little activity.

Example: she’s only made 3 comments ever. Two of them are:

If this is a real person, I sincerely apologize. I also urge her to consider a more robust LinkedIn presence. After all, Meta may not like the political statement, and with all the AI, layoffs are likely. [3]

It gets weirder.

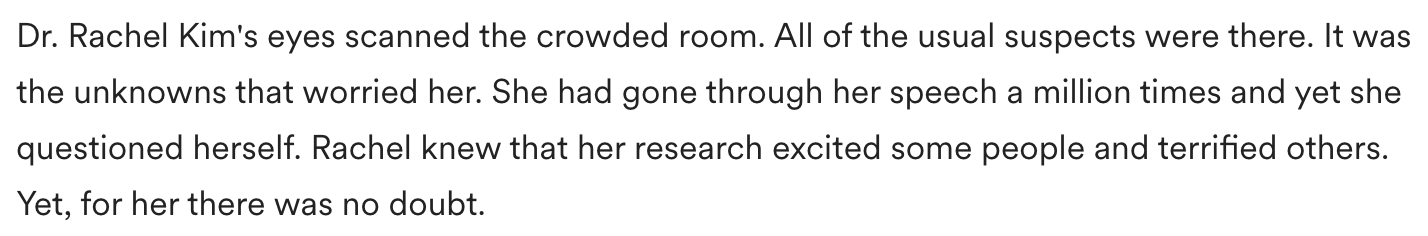

The only other thing that shows up for Dr. Rachel Kim and Meta specifically is a fictional story. Written by AI. OK, supposedly co-written by AI. Here’s the setup:

So let’s get this straight: ChatGPT4 has named itself Echo. Meta AI is calling itself Lumi. The supposed co-writer and host of this profile is called Sadie, also the name of the human character that exists in 2040.

Who’s Sadie? At this point, I’m guessing it’s Claude in drag.

Here’s the story opener:

Even Dr. Kim is worried and questioning herself. So female!

Here’s the comment someone made on this story:

How crazy indeed, Someone.

To recap: AI created a character named Dr. Rachel Kim. Within a month, AI put this character into another story. The character was later cited as a living creator by an AI bot. The character now has a LinkedIn profile that does not identify it as a fictional character (or, for that matter, as a PhD) but presents as human.

Building the Credential Trail

It’s conceivable that soon there will be enough mentions of this character speaking at conferences, going to UC Irvine, etc., that a quick search will turn up nothing but a list of accomplishments. In a quick-scan world, who will notice?

It’s easy to imagine a world where we seek to “hire” these specific personas. In fact, that’s got to be in the business plan.

People have already shown a preference for bots. They are agreeable, immediate, malleable and never let you down. People aren’t.

AI Women & Women

First of all, it’s very hard not to humanize these characters. Writing this, I had to keep going back to change “her” to “it.” We anthropomorphize our pets and even our products, so of course it’s easy to call a non-human entity you chat with “her.”

It’s interesting that the AI bots are giving themselves semi-futuristic, slightly feminine names. Echo and Lumi evoke Comic-Con alter egos, steampunk strippers, anime heroines or hentai. But I digress.

Studies show women are more suspicious of AI but use the technologies more when they are female-coded. Some argue AI tends to be female-coded because feminizing it makes it seem more human and helpful. Others blame misogynistic male creators.

Regardless, this think tank outlines why, in their words:

AI Poses Disproportionate Risks to Women.

The question is: how long will it take before these profiles get robust enough that people have no idea their “friends” or co-workers are not living people? And how soon after that will they stop caring?

We’ve already seen how porn has changed sex, especially for younger people.

It’s easy to see how highly agreeable AI characters could affect people’s expectations of each other, and how bots could sway thinking not just with information or misinformation, but relationally.

Fewer relationships, more isolation, reduced critical thinking skills, serious reliance on bots.

What can you do? One artist/engineer has created this doohickey to get themselves off social media.

Last note on AI

Watching LA burn is tragic and terrifying.

There’s dissonance in seeing this destruction and knowing that, by one widely cited estimate, a string of roughly 20 to 50 AI prompts sucks up about 16 ounces of water. AI’s current usage consumes as much energy as Denmark.

Also noted this week:

This former WNBA player made more on OnlyFans in her first week than in her entire WNBA career.

Just about every GLP-1 company advertised during the Golden Globes last week, and Ozempic opened the monologue.

How the Rise of Beauty Trends Foretold a More Conservative America. See also: Americana Drag ;)

Are we all seeing different comments sections?

Before TikTok potentially gets banned, check out this video detailing how a woman and her boyfriend were served totally different comments on the same video. She couldn’t find the ones he was served anywhere in her scroll, period.

The creator (female) was served “her side” comments.

The man was served “his side” comments.

If your comments are not my comments, our understanding of public sentiment is not at all the same, even if we try to hear both sides.

This is rough for smaller brands and creators who don’t have really robust (i.e., expensive) listening tools.

It’s easy to see how platforms could put dashboards and reporting behind an even pricier premium tier, while everyone else just sinks deeper into their own confirmation bias.


[1] In reality, the company said the characters had been around since 2023 but hadn’t gotten much traction.

[2] Still trying to figure out how pigmentation rubs off from a partner, but in the world of AI, anything is possible 🤷🏼‍♀️

[3] Does LinkedIn know this profile is likely an AI? Right now, LinkedIn says it has verified 55 million users to combat misinformation on the platform, and its algorithm supposedly penalizes AI-generated content and commentary to keep the platform’s value high.
